In case you run into this like I did

Useful Links:

A VERY Good Performance Tuning Doc: https://calomel.org/freebsd_network_tuning.html

https://forum.opnsense.org/index.php?topic=6590.0

http://virtualvillage.cloud/?p=177

http://www.cadc-ccda.hia-iha.nrc-cnrc.gc.ca/netperf/tuning-tcp.shtml

http://web.synametrics.com/app?operation=blog&st=dispblog&fn=00000000019_1413406728705&BloggerName=mike

https://www.freebsd.org/doc/en_US.ISO8859-1/books/handbook/configtuning-kernel-limits.html

https://lists.freebsd.org/pipermail/freebsd-performance/2009-December/003909.html

https://proj.sunet.se/E2E/tcptune.html

And another one:

http://45drives.blogspot.com/2016/05/how-to-tune-nas-for-direct-from-server.html

https://www.softnas.com/docs/softnas/v3/html/performance_tuning_for_vmware_vsphere_print.html

https://johnkeen.tech/freenas-11-2-and-esxi-6-7-iscsi-tutorial/

https://www.vmware.com/content/dam/digitalmarketing/vmware/en/pdf/techpaper/vmware-multipathing-configuration-software-iscsi-port-binding-white-paper.pdf

https://www.ixsystems.com/community/threads/iscsi-multipathing-slower-then-single-path-for-writes.22475/

https://forums.servethehome.com/index.php?threads/esxi-6-5-with-zfs-backed-nfs-datastore-optane-latency-aio-benchmarks.24099/

https://www.vmware.com/content/dam/digitalmarketing/vmware/en/pdf/techpaper/performance/vsphere-esxi-vcenter-server-67-performance-best-practices.pdf

http://austit.com/faq/323-enable-pnic-rss

http://www.virtubytes.com/2017/10/12/esxcli-nic-driver-firmware/

https://kb.vmware.com/s/article/50106444

https://gtacknowledge.extremenetworks.com/articles/Solution/000038545

https://bogiip.blogspot.com/2018/06/replace-esxi-ixgben-driver-with-ixbge.html

https://tinkertry.com/how-to-install-intel-x552-vib-on-esxi-6-on-superserver-5028d-tn4t

https://www.ixsystems.com/community/threads/10gbe-esxi-6-5-vmxnet-3-performance-is-poor-with-iperf3-tests.63173/

https://www.virtuallyghetto.com/2016/03/quick-tip-iperf-now-available-on-esxi.html

https://superuser.com/questions/959222/freebsd-turning-off-offloading

https://www.ixsystems.com/community/threads/bottleneck-in-iscsi-lans-speed.24363/

https://www.cyberciti.biz/faq/freebsd-command-to-find-sata-link-speed/

https://www.vmware.com/content/dam/digitalmarketing/vmware/en/pdf/whitepaper/iscsi_design_deploy-whitepaper.pdf

https://www.reddit.com/r/zfs/comments/8l20f5/zfs_record_size_is_smaller_really_better/

http://open-zfs.org/wiki/Performance_tuning

https://jrs-s.net/2019/04/03/on-zfs-recordsize/

https://www.reddit.com/r/zfs/comments/7pfutp/zfs_pool_planning_for_vm_storage/dsh1u3j/

https://www.reddit.com/r/zfs/comments/8pm7i0/8x_seagate_12tb_in_raidz2_poor_readwrite/

H730:

Note: The H730 works in pass-through mode, BUT you will see dmesg in FreeNAS report degraded SAS/SATA link speeds (150.00 MB/s) for the drives at boot. This isn't a reporting error: that is the speed the drives will actually operate at, no matter what negotiated speed the H730 shows in the BIOS/Device Config.

Go buy an HBA330! With it this problem goes away and FreeNAS reports the correct speed. There is currently (Feb 2020) no way to flash an H730 from Dell firmware to LSI IT firmware. If you are reading this and have found a way, please comment!
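To check which disks came up at the reduced rate, you can filter dmesg for the per-disk transfer lines. This is a minimal sketch: `slow_links` is a hypothetical helper, and it assumes FreeBSD's CAM message format `daN: <rate>MB/s transfers` (compare with your own dmesg). The test lines are illustrative, not captured from this system.

```sh
#!/bin/sh
# slow_links: read dmesg-style text on stdin and print every daN disk whose
# reported transfer rate is below the threshold (MB/s) given as $1.
# Assumes lines shaped like "da0: 150.000MB/s transfers ..." (FreeBSD CAM).
slow_links() {
    threshold="${1:-600}"
    awk -v t="$threshold" '
        /^da[0-9]+: [0-9.]+MB\/s transfers/ {
            dev = $1; sub(/:$/, "", dev)         # "da0:" -> "da0"
            rate = $2; sub(/MB\/s$/, "", rate)   # "150.000MB/s" -> "150.000"
            if (rate + 0 < t + 0) print dev, rate
        }'
}

# Usage on a live FreeNAS box:
#   dmesg | slow_links 600
```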

Edit /boot/loader.conf and add:

hw.pci.honor_msi_blacklist=0

https://bugs.freebsd.org/bugzilla/show_bug.cgi?id=203874

https://redmine.ixsystems.com/issues/26733

If anyone is still looking to resolve this issue: it's not an mrsas driver issue. It's apparently a blacklist, still present in FreeBSD, that disables MSI-X when FreeBSD detects it is running in VMware, due to an old limitation that used to exist in VMware. I found this workaround after noticing "mrsas0 MSI-x setup failed" in dmesg, followed by a fallback to legacy interrupts. So I chased down MSI-X and VMware and came across the first link on bugs.freebsd.org. I believe this affects mrsas and all HBAs that use MSI-X.

I have confirmed this working on the following setup:

Dell R430

PERC H730 configured in HBA mode, cache disabled, passed through via VMware

VMware vSphere 6.7

FreeNAS 11.2 U3 test VM with 10 vCPUs and 16 GB RAM.

To install FreeNAS the first time, either configure the VM with 1 vCPU and increase the count after installing and editing loader.conf, or detach the HBA, install FreeNAS, edit loader.conf, and then reattach the HBA.

Note: Editing /boot/loader.conf directly doesn't work for everyone; however, adding the variable as a system tunable (System → Tunables, type "loader") did work.
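If you prefer to script the loader.conf edit, a small idempotent helper avoids adding the line twice. `add_loader_tunable` and the `LOADER_CONF` override are hypothetical names for this sketch; if the file edit doesn't stick for you, use the Tunables route from the note above instead.

```sh
#!/bin/sh
# add_loader_tunable: append NAME="VALUE" to a loader.conf-style file unless
# NAME is already set there (an existing value is left untouched).
# The path defaults to /boot/loader.conf; override it with LOADER_CONF,
# e.g. to try the change on a copy first.
add_loader_tunable() {
    name="$1"
    value="$2"
    conf="${LOADER_CONF:-/boot/loader.conf}"
    if grep -q "^${name}=" "$conf" 2>/dev/null; then
        echo "${name} already set in ${conf}; leaving it alone" >&2
        return 0
    fi
    printf '%s="%s"\n' "$name" "$value" >> "$conf"
}

# Usage, then reboot. Afterwards `sysctl hw.pci.honor_msi_blacklist` should
# report 0 and dmesg should no longer show "mrsas0 MSI-x setup failed":
#   add_loader_tunable hw.pci.honor_msi_blacklist 0
```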

Optane 900P:

Credit: https://redmine.ixsystems.com/issues/26508#note-62

I found a simple fix for this issue by adding the Optane 900P device ID to passthru.map

– ssh to ESXi

– edit /etc/vmware/passthru.map

– add following lines at the end of the file:

# Intel Optane 900P

8086 2700 d3d0 false

– reboot the ESXi host

I can now pass through the 900P to FreeNAS 11.3 without issue
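The passthru.map edit can be scripted the same way. A sketch, assuming the four-column `vendor device resetMethod fptShareable` format used by the line above; `add_passthru_entry` and `PASSTHRU_MAP` are hypothetical names. Back up the original file first.

```sh
#!/bin/sh
# add_passthru_entry: append a passthru.map line (vendor, device, reset method,
# fptShareable), preceded by an optional comment, unless an entry for that
# vendor/device pair already exists. PASSTHRU_MAP defaults to the ESXi path.
add_passthru_entry() {
    vendor="$1"; device="$2"; reset="$3"; share="$4"; comment="$5"
    map="${PASSTHRU_MAP:-/etc/vmware/passthru.map}"
    if grep -Eq "^${vendor}[[:space:]]+${device}[[:space:]]" "$map" 2>/dev/null; then
        echo "entry for ${vendor}:${device} already present in ${map}" >&2
        return 0
    fi
    if [ -n "$comment" ]; then
        printf '# %s\n' "$comment" >> "$map"
    fi
    printf '%s %s %s %s\n' "$vendor" "$device" "$reset" "$share" >> "$map"
}

# Usage on the ESXi host, then reboot it:
#   add_passthru_entry 8086 2700 d3d0 false "Intel Optane 900P"
```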

To over-provision the drives, create a single 16 GB partition (the maximum ZIL size) on each one and assign it as the pool's write log, leaving the rest of the drive unallocated so the internal Intel firmware can use it for wear leveling and more consistent write performance. A side benefit is that you can create another 16 GB partition on the same drive as a ZIL for another pool if you'd like; just understand which eggs you are putting in which basket should a drive fail. In the example below, each pool gets its own two partitions, one from each 900P, in a mirrored config. Be warned, though: each device has only so much bandwidth and all partitions share it, so you may run into write contention if more than one pool slams the devices with writes at the same time.

Credit: https://www.ixsystems.com/community/threads/sharing-slog-devices-striped-ssds-partitioned-for-two-pools.62787/

PoolX

-nvd0p1

-nvd1p1

PoolY

-nvd0p2

-nvd1p2

etc…

# Make sure drives have all blocks unallocated, this is destructive!!!

# Blocks on drive must be completely clear to allow wear leveling to see them as "free space"

# Make sure you have the right device! PCIe devices may not enumerate in the order you expect

gpart destroy -F nvd0

gpart destroy -F nvd1

gpart create -s GPT nvd0

gpart create -s GPT nvd1

gpart add -t freebsd-zfs -a 1m -l sloga0 -s 16G nvd0

gpart add -t freebsd-zfs -a 1m -l slogb0 -s 16G nvd1

# To read drive partition IDs

glabel status | grep nvd0

#Output

# gpt/sloga0 N/A nvd0p1

#gptid/b47f75d5-4d17-11ea-aa55-0050568b4d0b N/A nvd0p1

glabel status | grep nvd1

#Output

# gpt/slogb0 N/A nvd1p1

#gptid/eff3f7f8-4d18-11ea-aa55-0050568b4d0b N/A nvd1p1

# Then use these gptids to add the partitions to the pool as a mirrored log (not currently possible in the FreeNAS 11.3 UI)

zpool add tank log mirror gptid/b47f75d5-4d17-11ea-aa55-0050568b4d0b gptid/eff3f7f8-4d18-11ea-aa55-0050568b4d0b
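Copying gptids by hand is error-prone, so here is a sketch that pulls them out of `glabel status` and prints the `zpool add` command for review rather than running it. `gptid_for` and `slog_mirror_cmd` are hypothetical helpers; the parsing assumes the three-column `Name Status Components` layout shown above.

```sh
#!/bin/sh
# gptid_for: read `glabel status` output on stdin and print the gptid/...
# label that belongs to the partition given as $1 (e.g. nvd0p1).
gptid_for() {
    awk -v part="$1" '$1 ~ /^gptid\// && $3 == part { print $1; exit }'
}

# slog_mirror_cmd: read `glabel status` output on stdin and print (not run)
# the zpool command that adds two partitions as a mirrored log vdev.
slog_mirror_cmd() {
    pool="$1"; part_a="$2"; part_b="$3"
    labels="$(cat)"
    id_a="$(printf '%s\n' "$labels" | gptid_for "$part_a")"
    id_b="$(printf '%s\n' "$labels" | gptid_for "$part_b")"
    if [ -z "$id_a" ] || [ -z "$id_b" ]; then
        echo "could not find gptids for $part_a / $part_b" >&2
        return 1
    fi
    echo "zpool add $pool log mirror $id_a $id_b"
}

# Usage: review the printed command, then run it yourself:
#   glabel status | slog_mirror_cmd tank nvd0p1 nvd1p1
```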

# If you had a second pool

gpart add -t freebsd-zfs -a 1m -l sloga1 -s 16G nvd0

gpart add -t freebsd-zfs -a 1m -l slogb1 -s 16G nvd1

# Then continue to find the drive IDs and add them to the second pool, etc...
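For more than one pool, the per-pool `gpart add` lines follow a simple pattern. This sketch only prints them (the destructive `destroy`/`create` steps are deliberately left out); `slog_gpart_plan` is a hypothetical name, and the sloga<N>/slogb<N> labels follow the scheme above.

```sh
#!/bin/sh
# slog_gpart_plan: print (do not run) the gpart commands that carve one
# SIZE-sized SLOG partition per pool out of each of two NVMe devices.
# Arguments: device A, device B, number of pools, partition size (default 16G).
slog_gpart_plan() {
    dev_a="$1"; dev_b="$2"; pools="$3"; size="${4:-16G}"
    n=0
    while [ "$n" -lt "$pools" ]; do
        echo "gpart add -t freebsd-zfs -a 1m -l sloga$n -s $size $dev_a"
        echo "gpart add -t freebsd-zfs -a 1m -l slogb$n -s $size $dev_b"
        n=$((n + 1))
    done
}

# Usage: review the output, then run the lines by hand:
#   slog_gpart_plan nvd0 nvd1 2 16G
```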

# Helpful commands

gpart show -l nvd0

=> 40 937703008 nvd0 GPT (447G)

40 2008 - free - (1.0M)

2048 33554432 1 sloga0 (16G)

33556480 904146568 - free - (431G)

gpart show -l nvd1

=> 40 937703008 nvd1 GPT (447G)

40 2008 - free - (1.0M)

2048 33554432 1 slogb0 (16G)

33556480 904146568 - free - (431G)
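The sizes in the `gpart show` output are sector counts. Assuming 512-byte sectors (which is what the numbers above imply), the conversion is a one-liner; the 2048-sector start of partition 1 is the 1 MiB alignment requested with `-a 1m` (2048 × 512 bytes). `sectors_to_gib` is a hypothetical helper.

```sh
#!/bin/sh
# sectors_to_gib: convert a gpart sector count to GiB, assuming 512-byte
# sectors (check "Sectorsize" in `gpart list` for your device).
sectors_to_gib() {
    awk -v s="$1" 'BEGIN { printf "%.2f\n", s * 512 / 1024 / 1024 / 1024 }'
}

# sloga0 partition:  sectors_to_gib 33554432    -> 16.00
# trailing free:     sectors_to_gib 904146568   -> 431.13
# whole device:      sectors_to_gib 937703008   -> 447.13
```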

Intel X520-DA2 Tweaks (ESXi):

Replacing the native ixgben driver with Intel's ixgbe driver (VIB). Before the change:

[root@SilverJet:~] esxcli network nic get -n vmnic0

Advertised Auto Negotiation: false

Advertised Link Modes: 10000BaseTwinax/Full

Auto Negotiation: false

Cable Type: DA

Current Message Level: -1

Driver Info:

Bus Info: 0000:01:00:0

Driver: ixgben

Firmware Version: 0x800003df

Version: 1.7.1.16

Link Detected: true

Link Status: Up

Name: vmnic0

PHYAddress: 0

Pause Autonegotiate: false

Pause RX: true

Pause TX: true

Supported Ports: DA

Supports Auto Negotiation: false

Supports Pause: true

Supports Wakeon: false

Transceiver:

Virtual Address: 00:50:56:d5:94:70

Wakeon: None

[root@SilverJet:~] esxcli software vib install -v https://cdn.tinkertry.com/files/net-ixgbe_4.5.3-1OEM.600.0.0.2494585.vib --no-sig-check

Installation Result

Message: The update completed successfully, but the system needs to be rebooted for the changes to be effective.

Reboot Required: true

VIBs Installed: INT_bootbank_net-ixgbe_4.5.3-1OEM.600.0.0.2494585

VIBs Removed: INT_bootbank_net-ixgbe_4.4.1-1OEM.600.0.0.2159203

VIBs Skipped:

[root@SilverJet:~] reboot

[root@SilverJet:~] esxcli system module set -e=true -m=ixgbe

[root@SilverJet:~] esxcli system module set -e=false -m=ixgben

[root@SilverJet:~] reboot

[root@SilverJet:~] esxcli network nic get -n vmnic0

Advertised Auto Negotiation: false

Advertised Link Modes: 10000BaseT/Full

Auto Negotiation: false

Cable Type: DA

Current Message Level: 7

Driver Info:

Bus Info: 0000:01:00.0

Driver: ixgbe

Firmware Version: 0x800003df, 1.2074.0

Version: 4.5.3-iov

Link Detected: true

Link Status: Up

Name: vmnic0

PHYAddress: 0

Pause Autonegotiate: false

Pause RX: true

Pause TX: true

Supported Ports: FIBRE

Supports Auto Negotiation: false

Supports Pause: true

Supports Wakeon: false

Transceiver: external

Virtual Address: 00:50:56:d5:94:70

Wakeon: None
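To confirm the swap without reading the whole listing, you can pull out just the driver name and version. A sketch, assuming the `Key: Value` layout shown above; `nic_driver` is a hypothetical helper.

```sh
#!/bin/sh
# nic_driver: read `esxcli network nic get -n vmnicN` output on stdin and
# print "<driver> <version>" from the Driver Info section.
# Splitting on ": " keeps "Firmware Version" separate from "Version".
nic_driver() {
    awk -F': *' '
        $1 ~ /^[[:space:]]*Driver$/  { drv = $2 }
        $1 ~ /^[[:space:]]*Version$/ { ver = $2 }
        END { print drv, ver }'
}

# Usage on the ESXi host:
#   esxcli network nic get -n vmnic0 | nic_driver
```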

All in One (AIO) Considerations:

Make sure you MATCH the MAC addresses of the FreeNAS VM's virtual NICs to the vmkernel port groups on the proper subnet for your storage network! If you don't, and you later add a second or third NIC to the VM, FreeBSD may enumerate the NICs in a different order: the vmx0 that used to carry the web interface may now become vmx1, contrary to what you'd expect.
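To line up FreeBSD's vmxN names with the MAC addresses shown on the VM's network adapters in vSphere, you can list the `ether` address per interface. A sketch; `mac_map` is a hypothetical helper, and the MACs in the test are made up.

```sh
#!/bin/sh
# mac_map: read FreeBSD `ifconfig` output on stdin and print "<iface> <mac>"
# for every interface that has an ether address.
mac_map() {
    awk '
        /^[^[:space:]]/ { iface = $1; sub(/:$/, "", iface) }  # "vmx0:" header line
        $1 == "ether"   { print iface, $2 }'
}

# Usage inside FreeNAS:
#   ifconfig | mac_map
```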

iSCSI:

Credit (Page 12): https://www.vmware.com/content/dam/digitalmarketing/vmware/en/pdf/techpaper/vmware-multipathing-configuration-software-iscsi-port-binding-white-paper.pdf

If your iSCSI speed really sucks (like 40 MB/s over a 10 Gbps link) or you are getting failed vMotions, chances are you’ve got paths that aren’t actually paths…

iSCSI Re-login:

If you have an already established iSCSI session before port binding configuration, you can remove the existing iSCSI sessions and log in again for the port binding configuration to take effect.

Note: vmhbaXX is the software iSCSI adapter vmhba ID

To list the existing iSCSI sessions, run the following command:

esxcli iscsi session list --adapter vmhbaXX

To remove the existing iSCSI sessions, run the following command (MAKE SURE YOU DO NOT HAVE ACTIVE I/O!):

esxcli iscsi session remove --adapter vmhbaXX

To enable sessions as per the current iSCSI configuration, run the following command:

esxcli iscsi session add --adapter vmhbaXX

Or all at once:

esxcli iscsi session remove --adapter vmhba64 && esxcli iscsi session add --adapter vmhba64
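The same remove/add sequence, wrapped so it stops if the removal fails and can be previewed first. A sketch; `iscsi_relogin` and the `DRY_RUN` switch are hypothetical, and `vmhba64` is just the adapter name from the example above.

```sh
#!/bin/sh
# iscsi_relogin: drop and re-create the software iSCSI sessions on one
# adapter so a new port binding / discovery config takes effect.
# Stop all I/O first! With DRY_RUN=1 the commands are only printed.
iscsi_relogin() {
    adapter="$1"
    if [ -z "$adapter" ]; then
        echo "usage: iscsi_relogin vmhbaXX" >&2
        return 2
    fi
    for action in remove add; do
        if [ -n "$DRY_RUN" ]; then
            echo "esxcli iscsi session $action --adapter $adapter"
        else
            # Same as "remove && add": give up if the removal fails.
            esxcli iscsi session "$action" --adapter "$adapter" || return 1
        fi
    done
}

# Usage on the ESXi host (check with `esxcli iscsi session list` afterwards):
#   iscsi_relogin vmhba64
```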

ESXi 6.0 supports only 512-byte logical and physical block sizes. ESXi 6.5 supports only a 512-byte logical block size, with a 512- or 4096-byte physical block size. In FreeNAS you must disable physical block size reporting on the iSCSI extent.

VMware iSCSI best practice guide:

http://www.vmware.com/content/dam/digitalmarketing/vmware/en/pdf/whitepaper/iscsi_design_deploy-whitepaper.pdf

When using MPIO between FreeNAS and VMware ESXi, you should NOT use VMkernel port binding for iSCSI.

Read here: https://kb.vmware.com/selfservice/microsites/search.do?cmd=displayKC&docType=kc&externalId=2038869

For each ESXi cluster that will share a LUN, create a portal group in FreeNAS with an “extra portal IP” configured for each FreeNAS interface that will carry iSCSI traffic. – TODO: Research

On the ESXi side, do NOT create a VMkernel port binding configuration. Simply add each of the portal IPs as a static discovery target. Dynamic discovery also works in ESXi 6.7.
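Adding one static target per portal IP can be scripted. This sketch assumes the standard `esxcli iscsi adapter discovery statictarget add` and `esxcli storage core adapter rescan` commands; the adapter, target IQN, and portal IPs in the usage line are placeholders, and `add_static_targets`/`DRY_RUN` are hypothetical names.

```sh
#!/bin/sh
# run: execute a command, or just print it when DRY_RUN is set.
run() { if [ -n "$DRY_RUN" ]; then echo "$*"; else "$@"; fi; }

# add_static_targets: add one static discovery target per FreeNAS portal IP
# to the software iSCSI adapter, then rescan the adapter.
# Arguments: adapter, target IQN, then one or more portal IPs.
add_static_targets() {
    adapter="$1"; iqn="$2"; shift 2
    for ip in "$@"; do
        run esxcli iscsi adapter discovery statictarget add \
            --adapter "$adapter" --address "$ip:3260" --name "$iqn"
    done
    run esxcli storage core adapter rescan --adapter "$adapter"
}

# Usage (placeholder values; use the ones from your setup):
#   add_static_targets vmhba64 iqn.2005-10.org.freenas.ctl:vmware 10.0.10.5 10.0.20.5
```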

Use jumbo frames if your network equipment supports them end to end. Expect a slight performance increase.
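Jumbo frames only help if every hop passes them, and `vmkping` with the don't-fragment flag is a common way to check from the ESXi side. The payload is the MTU minus 28 bytes of IPv4 and ICMP headers, so 8972 for a 9000-byte MTU. `jumbo_ping_cmd`, `vmk1`, and the IP are placeholders for this sketch.

```sh
#!/bin/sh
# jumbo_ping_cmd: build the vmkping command that checks an MTU end to end
# from a given VMkernel interface. Payload = MTU - 20 (IPv4 header)
# - 8 (ICMP header); -d sets "don't fragment", so the ping only succeeds
# if every hop passes the full-size frame.
jumbo_ping_cmd() {
    vmk="$1"; target="$2"; mtu="${3:-9000}"
    echo "vmkping -I $vmk -d -s $((mtu - 28)) $target"
}

# Usage: print the command, then run it on the ESXi host:
#   jumbo_ping_cmd vmk1 10.0.10.5    # vmkping -I vmk1 -d -s 8972 10.0.10.5
```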

Set the ZFS sync property on the zvol to “sync=always” for in-flight data safety. This slows performance down a bit, but that can be mitigated by using a very low-latency device, like an Optane 900P, as your pool's SLOG.

If using FreeNAS 9.10+, Delay Ack:enabled, https://bugs.pcbsd.org/issues/15920 – TODO: Research

Before connecting a FreeNAS LUN to your ESXi hosts, run the following command on each ESXi host, reboot, and then connect to the LUNs. The rule sets the VMware Round Robin PSP to switch paths after every I/O operation (iops=1). Good for small environments; not needed for large ones.

esxcli storage nmp satp rule add -s "VMW_SATP_ALUA" -V "FreeNAS" -M "iSCSI Disk" -P "VMW_PSP_RR" -O "iops=1" -c "tpgs_on" -e "FreeNAS array with ALUA support"

Or now with the TrueNAS rename:

esxcli storage nmp satp rule add -s "VMW_SATP_ALUA" -V "TrueNAS" -M "iSCSI Disk" -P "VMW_PSP_RR" -O "iops=1" -c "tpgs_on" -e "TrueNAS array with ALUA support"
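If you have LUNs that report either vendor string, both rules can be added in one go. A sketch that only wraps the two commands above; `add_nas_alua_rules` and `DRY_RUN` are hypothetical names.

```sh
#!/bin/sh
# run: execute a command, or just print it when DRY_RUN is set.
run() { if [ -n "$DRY_RUN" ]; then echo "$*"; else "$@"; fi; }

# add_nas_alua_rules: add the ALUA / Round Robin (iops=1) SATP rule for each
# vendor string given, e.g. both the FreeNAS and TrueNAS names.
add_nas_alua_rules() {
    for vendor in "$@"; do
        run esxcli storage nmp satp rule add -s VMW_SATP_ALUA -V "$vendor" \
            -M "iSCSI Disk" -P VMW_PSP_RR -O iops=1 -c tpgs_on \
            -e "$vendor array with ALUA support"
    done
}

# Usage on each host, then reboot before connecting the LUNs:
#   add_nas_alua_rules FreeNAS TrueNAS
# Check afterwards with:
#   esxcli storage nmp satp rule list -s VMW_SATP_ALUA
```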

Adjusting the IOPS parameter:

Credit: https://kb.vmware.com/s/article/2069356

To adjust the IOPS parameter from the default of 1000 to 1, run the command below. This may not be required if you created the NMP rule above: I’ve noticed that if you set Round Robin this way and reboot the host without that rule in place, the iSCSI LUNs revert to non-Round Robin.

In ESXi 5.x/6.x/7.x:

for i in $(esxcfg-scsidevs -c | awk '{print $1}' | grep naa.xxxx); do esxcli storage nmp psp roundrobin deviceconfig set --type=iops --iops=1 --device="$i"; done

Where naa.xxxx matches the first few characters of your NAA IDs. To verify that the changes were applied, run:

esxcli storage nmp device list

You should see output similar to:

Path Selection Policy: VMW_PSP_RR

Path Selection Policy Device Config: {policy=iops,iops=1,bytes=10485760,useANO=0;lastPathIndex=1: NumIOsPending=0,numBytesPending=0}

Path Selection Policy Device Custom Config:

Working Paths: vmhba33:C1:T4:L0, vmhba33:C0:T4:L0
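With many LUNs, scanning that listing by eye gets tedious. This sketch summarizes each `naa.` device's PSP, whether `iops=1` is set, and its number of working paths (a LUN with only one working path fits the "paths that aren't actually paths" symptom above). `rr_audit` is a hypothetical helper, the parsing assumes each device block starts with an unindented `naa.` line, and the NAA IDs in the test are made up.

```sh
#!/bin/sh
# rr_audit: read `esxcli storage nmp device list` output on stdin and print
# one line per naa. device: PSP, whether iops=1 is set, working path count.
rr_audit() {
    awk '
        function flush() {
            if (dev != "")
                print dev, psp, (iops1 ? "iops=1" : "iops!=1"), paths " paths"
        }
        /^naa\./ { flush(); dev = $1; psp = "?"; iops1 = 0; paths = 0; next }
        /Path Selection Policy:/ { psp = $NF }
        /Path Selection Policy Device Config:/ { iops1 = ($0 ~ /iops=1[,}]/) }
        /Working Paths:/ {
            sub(/.*Working Paths:[[:space:]]*/, "")
            paths = ($0 == "") ? 0 : split($0, p, ",")
        }
        END { flush() }'
}

# Usage on the ESXi host:
#   esxcli storage nmp device list | rr_audit
```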

If you see frequent iSCSI logins/logouts on the target side, do the following on the ESXi host (https://kb.vmware.com/selfservice/microsites/search.do?language=en_US&cmd=displayKC&externalId=2133286):

Disable the SMART daemon (smartd). However, this affects local data capture of SMART data for internal drives.

Note: VMware recommends against disabling smartd if possible.

/etc/init.d/smartd stop

chkconfig smartd off

Helpful commands:

zpool status tank

zpool iostat -v tank 1

zfs-stats -a

zfs set redundant_metadata=most tank

zfs get all tank

zfs get all tank/vmware_iscsi

zpool remove tank mirror-6 <Log Device Mirror; get with status on pool>

fio --name=seqwrite --rw=write --direct=0 --iodepth=32 --bs=128k --numjobs=8 --size=8G --group_reporting

iostat -xz -w 10 -c 10
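Note that the fio runs here use the default `psync` engine (see `ioengine=psync` and `IO depths : 1=100.0%` in the results below), and fio's documentation says iodepth has no effect on synchronous engines, so `--iodepth=32` is effectively 1. A sketch of a wrapper that makes the engine explicit; `fio_seqwrite` is a hypothetical name, and `posixaio` as an alternative is a suggestion, not something tested in this post.

```sh
#!/bin/sh
# run: execute a command, or just print it when DRY_RUN is set.
run() { if [ -n "$DRY_RUN" ]; then echo "$*"; else "$@"; fi; }

# fio_seqwrite: the sequential-write test above with a chosen block size
# (default 128k) and I/O engine (default psync, what the runs here used).
# iodepth only has an effect with asynchronous engines.
fio_seqwrite() {
    bs="${1:-128k}"; engine="${2:-psync}"
    run fio --name=seqwrite --rw=write --direct=0 --ioengine="$engine" \
        --iodepth=32 --bs="$bs" --numjobs=8 --size=8G --group_reporting
}

# Usage from a dataset directory, e.g. /mnt/tank:
#   fio_seqwrite 1M             # equivalent to the 1M run under Results
#   fio_seqwrite 1M posixaio    # async engine, so iodepth=32 actually applies
```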

Results:

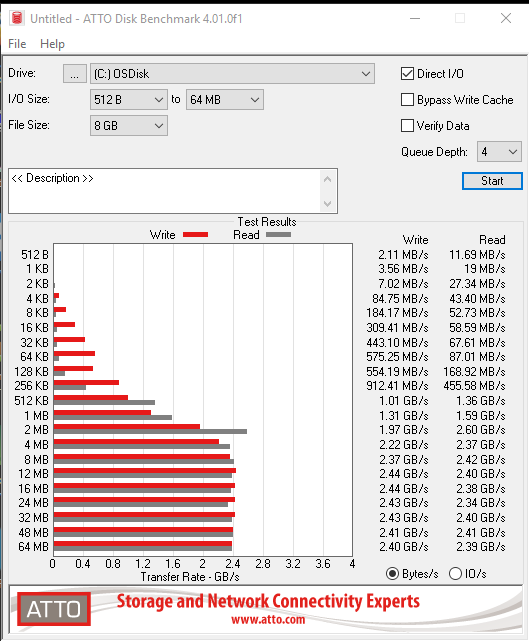

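So I finally figured a few things out! A little tuning goes a long way, and no sync=disabled is required (sync=always is set on both the pool and the zvol). The image shows an iSCSI MPIO benchmark from Windows; below are a local fio run and the zfs-stats output. Most of the tuning parameters below are at their defaults: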

root@freenas[/mnt/tank]# fio --name=seqwrite --rw=write --direct=0 --iodepth=32 --bs=1M --numjobs=8 --size=8G --group_reporting

seqwrite: (g=0): rw=write, bs=(R) 1024KiB-1024KiB, (W) 1024KiB-1024KiB, (T) 1024KiB-1024KiB, ioengine=psync, iodepth=32

...

fio-3.16

Starting 8 processes

seqwrite: Laying out IO file (1 file / 8192MiB)

seqwrite: Laying out IO file (1 file / 8192MiB)

seqwrite: Laying out IO file (1 file / 8192MiB)

seqwrite: Laying out IO file (1 file / 8192MiB)

seqwrite: Laying out IO file (1 file / 8192MiB)

seqwrite: Laying out IO file (1 file / 8192MiB)

seqwrite: Laying out IO file (1 file / 8192MiB)

seqwrite: Laying out IO file (1 file / 8192MiB)

Jobs: 4 (f=4): [_(2),W(1),_(1),W(1),_(1),W(2)][97.4%][w=434MiB/s][w=434 IOPS][eta 00m:02s]

seqwrite: (groupid=0, jobs=8): err= 0: pid=2686: Mon Mar 16 13:41:52 2020

write: IOPS=872, BW=873MiB/s (915MB/s)(64.0GiB/75083msec)

clat (usec): min=706, max=5811.9k, avg=8590.36, stdev=87062.16

lat (usec): min=732, max=5811.0k, avg=8679.94, stdev=87063.26

clat percentiles (usec):

| 1.00th=[ 1172], 5.00th=[ 1713], 10.00th=[ 2147],

| 20.00th=[ 3130], 30.00th=[ 3818], 40.00th=[ 4490],

| 50.00th=[ 5538], 60.00th=[ 6915], 70.00th=[ 8455],

| 80.00th=[ 10028], 90.00th=[ 11863], 95.00th=[ 13435],

| 99.00th=[ 19006], 99.50th=[ 22414], 99.90th=[ 141558],

| 99.95th=[ 834667], 99.99th=[4462740]

bw ( MiB/s): min= 15, max= 3272, per=100.00%, avg=1120.06, stdev=78.10, samples=925

iops : min= 11, max= 3272, avg=1117.79, stdev=78.16, samples=925

lat (usec) : 750=0.01%, 1000=0.48%

lat (msec) : 2=8.03%, 4=24.23%, 10=47.40%, 20=19.06%, 50=0.66%

lat (msec) : 100=0.01%, 250=0.03%, 500=0.02%, 750=0.01%, 1000=0.01%

lat (msec) : 2000=0.01%, >=2000=0.04%

cpu : usr=1.06%, sys=26.40%, ctx=841741, majf=0, minf=0

IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=0,65536,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=32

Run status group 0 (all jobs):

WRITE: bw=873MiB/s (915MB/s), 873MiB/s-873MiB/s (915MB/s-915MB/s), io=64.0GiB (68.7GB), run=75083-75083msec
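The two bandwidth figures are the same number in different units: fio issued 65536 writes of 1 MiB (`issued rwts: total=0,65536`) in 75083 ms and reports both MiB/s and decimal MB/s. A small check, with `fio_bw` as a hypothetical helper:

```sh
#!/bin/sh
# fio_bw: recompute fio's bandwidth from the number of writes, the block
# size in bytes and the run time in milliseconds, in MiB/s and MB/s.
fio_bw() {
    awk -v n="$1" -v bs="$2" -v ms="$3" 'BEGIN {
        bytes = n * bs
        secs  = ms / 1000
        printf "%.0f MiB/s (%.0f MB/s)\n", bytes / 1048576 / secs, bytes / 1e6 / secs
    }'
}

# 65536 writes x 1 MiB in 75083 ms:
#   fio_bw 65536 1048576 75083    # -> 873 MiB/s (915 MB/s)
```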

root@freenas[~]# zfs-stats -a

------------------------------------------------------------------------

sysctl: unknown oid 'kstat.zfs.misc.arcstats.l2_writes_hdr_miss'

sysctl: unknown oid 'kstat.zfs.misc.arcstats.recycle_miss'

sysctl: unknown oid 'kstat.zfs.misc.zfetchstats.bogus_streams'

sysctl: unknown oid 'kstat.zfs.misc.zfetchstats.colinear_hits'

sysctl: unknown oid 'kstat.zfs.misc.zfetchstats.colinear_misses'

sysctl: unknown oid 'kstat.zfs.misc.zfetchstats.reclaim_failures'

sysctl: unknown oid 'kstat.zfs.misc.zfetchstats.reclaim_successes'

sysctl: unknown oid 'kstat.zfs.misc.zfetchstats.streams_noresets'

sysctl: unknown oid 'kstat.zfs.misc.zfetchstats.streams_resets'

sysctl: unknown oid 'kstat.zfs.misc.zfetchstats.stride_hits'

sysctl: unknown oid 'kstat.zfs.misc.zfetchstats.stride_misses'

ZFS Subsystem Report Mon Mar 16 13:36:28 2020

----------------------------------------------------------------------

System Information:

Kernel Version: 1103000 (osreldate)

Hardware Platform: amd64

Processor Architecture: amd64

FreeBSD 11.3-RELEASE-p6 #0 r325575+d5b100edfcb(HEAD): Fri Feb 21 18:53:26 UTC 2020 root

1:36PM up 41 mins, 2 users, load averages: 0.07, 0.33, 0.94

----------------------------------------------------------------------

System Memory Statistics:

Physical Memory: 130934.25M

Kernel Memory: 1258.42M

DATA: 96.34% 1212.49M

TEXT: 3.65% 45.93M

----------------------------------------------------------------------

ZFS pool information:

Storage pool Version (spa): 5000

Filesystem Version (zpl): 5

----------------------------------------------------------------------

ARC Misc:

Deleted: 30

Recycle Misses: 0

Mutex Misses: 338

Evict Skips: 338

ARC Size:

Current Size (arcsize): 61.95% 73001.27M

Target Size (Adaptive, c): 81.04% 95502.93M

Min Size (Hard Limit, c_min): 13.42% 15825.16M

Max Size (High Water, c_max): ~7:1 117836.95M

ARC Size Breakdown:

Recently Used Cache Size (p): 93.75% 89533.99M

Freq. Used Cache Size (c-p): 6.25% 5968.93M

ARC Hash Breakdown:

Elements Max: 3668687

Elements Current: 99.81% 3661799

Collisions: 1543019

Chain Max: 0

Chains: 346056

ARC Eviction Statistics:

Evicts Total: 180546560

Evicts Eligible for L2: 97.74% 176477184

Evicts Ineligible for L2: 2.25% 4069376

Evicts Cached to L2: 34813390336

ARC Efficiency

Cache Access Total: 24117584

Cache Hit Ratio: 83.83% 20219405

Cache Miss Ratio: 16.16% 3898179

Actual Hit Ratio: 83.19% 20065299

Data Demand Efficiency: 59.37%

Data Prefetch Efficiency: 46.46%

CACHE HITS BY CACHE LIST:

Most Recently Used (mru): 37.18% 7518270

Most Frequently Used (mfu): 62.05% 12547029

MRU Ghost (mru_ghost): 1.07% 217132

MFU Ghost (mfu_ghost): 0.03% 6092

CACHE MISSES BY DATA TYPE:

Demand Data: 94.30% 3676086

Prefetch Data: 4.57% 178270

Demand Metadata: 1.04% 40822

Prefetch Metadata: 0.07% 3001

----------------------------------------------------------------------

L2 ARC Summary:

Low Memory Aborts: 2

R/W Clashes: 0

Free on Write: 21

L2 ARC Size:

Current Size: (Adaptive) 113743.66M

Header Size: 0.00% 0.00M

L2 ARC Read/Write Activity:

Bytes Written: 249373.65M

Bytes Read: 4089.92M

L2 ARC Breakdown:

Access Total: 3888009

Hit Ratio: 5.26% 204685

Miss Ratio: 94.73% 3683324

Feeds: 2364

WRITES:

Sent Total: 100.00% 1851

----------------------------------------------------------------------

VDEV Cache Summary:

Access Total: 15732

Hits Ratio: 53.22% 8373

Miss Ratio: 46.77% 7359

Delegations: 1439

f_float_divide: 0 / 0: division by 0

f_float_divide: 0 / 0: division by 0

f_float_divide: 0 / 0: division by 0

f_float_divide: 0 / 0: division by 0

----------------------------------------------------------------------

File-Level Prefetch Stats (DMU):

DMU Efficiency:

Access Total: 8795774

Hit Ratio: 0.35% 31426

Miss Ratio: 99.64% 8764348

Colinear Access Total: 0

Colinear Hit Ratio: 0.00% 0

Colinear Miss Ratio: 0.00% 0

Stride Access Total: 0

Stride Hit Ratio: 0.00% 0

Stride Miss Ratio: 0.00% 0

DMU misc:

Reclaim successes: 0

Reclaim failures: 0

Stream resets: 0

Stream noresets: 0

Bogus streams: 0

----------------------------------------------------------------------

ZFS Tunable (sysctl):

kern.maxusers=8519

vfs.zfs.vol.immediate_write_sz=32768

vfs.zfs.vol.unmap_sync_enabled=0

vfs.zfs.vol.unmap_enabled=1

vfs.zfs.vol.recursive=0

vfs.zfs.vol.mode=2

vfs.zfs.sync_pass_rewrite=2

vfs.zfs.sync_pass_dont_compress=5

vfs.zfs.sync_pass_deferred_free=2

vfs.zfs.zio.dva_throttle_enabled=1

vfs.zfs.zio.exclude_metadata=0

vfs.zfs.zio.use_uma=1

vfs.zfs.zio.taskq_batch_pct=75

vfs.zfs.zil_slog_bulk=786432

vfs.zfs.zil_nocacheflush=0

vfs.zfs.zil_replay_disable=0

vfs.zfs.version.zpl=5

vfs.zfs.version.spa=5000

vfs.zfs.version.acl=1

vfs.zfs.version.ioctl=7

vfs.zfs.debug=0

vfs.zfs.super_owner=0

vfs.zfs.immediate_write_sz=32768

vfs.zfs.cache_flush_disable=0

vfs.zfs.standard_sm_blksz=131072

vfs.zfs.dtl_sm_blksz=4096

vfs.zfs.min_auto_ashift=12

vfs.zfs.max_auto_ashift=13

vfs.zfs.vdev.def_queue_depth=32

vfs.zfs.vdev.queue_depth_pct=1000

vfs.zfs.vdev.write_gap_limit=0

vfs.zfs.vdev.read_gap_limit=32768

vfs.zfs.vdev.aggregation_limit_non_rotating=131072

vfs.zfs.vdev.aggregation_limit=1048576

vfs.zfs.vdev.initializing_max_active=1

vfs.zfs.vdev.initializing_min_active=1

vfs.zfs.vdev.removal_max_active=2

vfs.zfs.vdev.removal_min_active=1

vfs.zfs.vdev.trim_max_active=64

vfs.zfs.vdev.trim_min_active=1

vfs.zfs.vdev.scrub_max_active=2

vfs.zfs.vdev.scrub_min_active=1

vfs.zfs.vdev.async_write_max_active=10

vfs.zfs.vdev.async_write_min_active=1

vfs.zfs.vdev.async_read_max_active=3

vfs.zfs.vdev.async_read_min_active=1

vfs.zfs.vdev.sync_write_max_active=10

vfs.zfs.vdev.sync_write_min_active=10

vfs.zfs.vdev.sync_read_max_active=10

vfs.zfs.vdev.sync_read_min_active=10

vfs.zfs.vdev.max_active=1000

vfs.zfs.vdev.async_write_active_max_dirty_percent=60

vfs.zfs.vdev.async_write_active_min_dirty_percent=30

vfs.zfs.vdev.mirror.non_rotating_seek_inc=1

vfs.zfs.vdev.mirror.non_rotating_inc=0

vfs.zfs.vdev.mirror.rotating_seek_offset=1048576

vfs.zfs.vdev.mirror.rotating_seek_inc=5

vfs.zfs.vdev.mirror.rotating_inc=0

vfs.zfs.vdev.trim_on_init=1

vfs.zfs.vdev.bio_delete_disable=0

vfs.zfs.vdev.bio_flush_disable=0

vfs.zfs.vdev.cache.bshift=16

vfs.zfs.vdev.cache.size=16384

vfs.zfs.vdev.cache.max=16384

vfs.zfs.vdev.validate_skip=0

vfs.zfs.vdev.max_ms_shift=38

vfs.zfs.vdev.default_ms_shift=29

vfs.zfs.vdev.max_ms_count_limit=131072

vfs.zfs.vdev.min_ms_count=16

vfs.zfs.vdev.max_ms_count=200

vfs.zfs.vdev.trim_max_pending=10000

vfs.zfs.txg.timeout=120

vfs.zfs.trim.enabled=1

vfs.zfs.trim.max_interval=1

vfs.zfs.trim.timeout=30

vfs.zfs.trim.txg_delay=2

vfs.zfs.space_map_ibs=14

vfs.zfs.spa_allocators=4

vfs.zfs.spa_min_slop=134217728

vfs.zfs.spa_slop_shift=5

vfs.zfs.spa_asize_inflation=24

vfs.zfs.deadman_enabled=1

vfs.zfs.deadman_checktime_ms=60000

vfs.zfs.deadman_synctime_ms=600000

vfs.zfs.debug_flags=0

vfs.zfs.debugflags=0

vfs.zfs.recover=0

vfs.zfs.spa_load_verify_data=1

vfs.zfs.spa_load_verify_metadata=1

vfs.zfs.spa_load_verify_maxinflight=10000

vfs.zfs.max_missing_tvds_scan=0

vfs.zfs.max_missing_tvds_cachefile=2

vfs.zfs.max_missing_tvds=0

vfs.zfs.spa_load_print_vdev_tree=0

vfs.zfs.ccw_retry_interval=300

vfs.zfs.check_hostid=1

vfs.zfs.mg_fragmentation_threshold=85

vfs.zfs.mg_noalloc_threshold=0

vfs.zfs.condense_pct=200

vfs.zfs.metaslab_sm_blksz=4096

vfs.zfs.metaslab.bias_enabled=1

vfs.zfs.metaslab.lba_weighting_enabled=1

vfs.zfs.metaslab.fragmentation_factor_enabled=1

vfs.zfs.metaslab.preload_enabled=1

vfs.zfs.metaslab.preload_limit=3

vfs.zfs.metaslab.unload_delay=8

vfs.zfs.metaslab.load_pct=50

vfs.zfs.metaslab.min_alloc_size=33554432

vfs.zfs.metaslab.df_free_pct=4

vfs.zfs.metaslab.df_alloc_threshold=131072

vfs.zfs.metaslab.debug_unload=0

vfs.zfs.metaslab.debug_load=0

vfs.zfs.metaslab.fragmentation_threshold=70

vfs.zfs.metaslab.force_ganging=16777217

vfs.zfs.free_bpobj_enabled=1

vfs.zfs.free_max_blocks=18446744073709551615

vfs.zfs.zfs_scan_checkpoint_interval=7200

vfs.zfs.zfs_scan_legacy=0

vfs.zfs.no_scrub_prefetch=0

vfs.zfs.no_scrub_io=0

vfs.zfs.resilver_min_time_ms=3000

vfs.zfs.free_min_time_ms=1000

vfs.zfs.scan_min_time_ms=1000

vfs.zfs.scan_idle=50

vfs.zfs.scrub_delay=4

vfs.zfs.resilver_delay=2

vfs.zfs.top_maxinflight=128

vfs.zfs.delay_scale=500000

vfs.zfs.delay_min_dirty_percent=98

vfs.zfs.dirty_data_sync_pct=95

vfs.zfs.dirty_data_max_percent=10

vfs.zfs.dirty_data_max_max=17179869184

vfs.zfs.dirty_data_max=17179869184

vfs.zfs.max_recordsize=1048576

vfs.zfs.default_ibs=15

vfs.zfs.default_bs=9

vfs.zfs.zfetch.array_rd_sz=1048576

vfs.zfs.zfetch.max_idistance=67108864

vfs.zfs.zfetch.max_distance=33554432

vfs.zfs.zfetch.min_sec_reap=2

vfs.zfs.zfetch.max_streams=8

vfs.zfs.prefetch_disable=0

vfs.zfs.send_holes_without_birth_time=1

vfs.zfs.mdcomp_disable=0

vfs.zfs.per_txg_dirty_frees_percent=30

vfs.zfs.nopwrite_enabled=1

vfs.zfs.dedup.prefetch=1

vfs.zfs.dbuf_cache_lowater_pct=10

vfs.zfs.dbuf_cache_hiwater_pct=10

vfs.zfs.dbuf_metadata_cache_overflow=0

vfs.zfs.dbuf_metadata_cache_shift=6

vfs.zfs.dbuf_cache_shift=5

vfs.zfs.dbuf_metadata_cache_max_bytes=2074236096

vfs.zfs.dbuf_cache_max_bytes=4148472192

vfs.zfs.arc_min_prescient_prefetch_ms=6

vfs.zfs.arc_min_prefetch_ms=1

vfs.zfs.l2c_only_size=0

vfs.zfs.mfu_ghost_data_esize=289089536

vfs.zfs.mfu_ghost_metadata_esize=5746176

vfs.zfs.mfu_ghost_size=294835712

vfs.zfs.mfu_data_esize=1775566848

vfs.zfs.mfu_metadata_esize=1676800

vfs.zfs.mfu_size=7225051136

vfs.zfs.mru_ghost_data_esize=25292875264

vfs.zfs.mru_ghost_metadata_esize=0

vfs.zfs.mru_ghost_size=25292875264

vfs.zfs.mru_data_esize=67462772224

vfs.zfs.mru_metadata_esize=123261440

vfs.zfs.mru_size=68306886144

vfs.zfs.anon_data_esize=0

vfs.zfs.anon_metadata_esize=0

vfs.zfs.anon_size=140993536

vfs.zfs.l2arc_norw=0

vfs.zfs.l2arc_feed_again=1

vfs.zfs.l2arc_noprefetch=1

vfs.zfs.l2arc_feed_min_ms=200

vfs.zfs.l2arc_feed_secs=1

vfs.zfs.l2arc_headroom=2

vfs.zfs.l2arc_write_boost=2500000000

vfs.zfs.l2arc_write_max=2500000000

vfs.zfs.arc_meta_limit=30890250000

vfs.zfs.arc_free_target=696043

vfs.zfs.arc_kmem_cache_reap_retry_ms=1000

vfs.zfs.compressed_arc_enabled=1

vfs.zfs.arc_grow_retry=60

vfs.zfs.arc_shrink_shift=7

vfs.zfs.arc_average_blocksize=8192

vfs.zfs.arc_no_grow_shift=5

vfs.zfs.arc_min=16593888768

vfs.zfs.arc_max=123561000000

vfs.zfs.abd_chunk_size=4096

vfs.zfs.abd_scatter_enabled=1

vm.kmem_size=133824851968

vm.kmem_size_scale=1

vm.kmem_size_min=0

vm.kmem_size_max=1319413950874

----------------------------------------------------------------------
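Most values in this listing are defaults, but as far as I know a few are not stock FreeBSD/OpenZFS values: `vfs.zfs.txg.timeout` defaults to 5 seconds, and `vfs.zfs.l2arc_write_max`/`l2arc_write_boost` default to 8 MiB (8388608). A sketch that flags such differences; the three-entry defaults table is an assumption and deliberately small, and `nondefault` is a hypothetical helper.

```sh
#!/bin/sh
# nondefault: read "name=value" tunable lines (as in the listing above) on
# stdin and print those that differ from a small table of stock defaults.
# Extend the table with values you have verified for your release.
nondefault() {
    awk -F= '
        BEGIN {
            def["vfs.zfs.txg.timeout"]       = "5"
            def["vfs.zfs.l2arc_write_max"]   = "8388608"
            def["vfs.zfs.l2arc_write_boost"] = "8388608"
        }
        ($1 in def) && $2 != def[$1] { print $1 ": " $2 " (default " def[$1] ")" }'
}

# Usage on FreeNAS (sysctl -e prints name=value pairs):
#   sysctl -e vfs.zfs | nondefault
```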

Additional Settings (Test):

For ESXi iSCSI:

See: https://www.ixsystems.com/community/threads/iscsi-multipathing-slower-then-single-path-for-writes.22475/post-134650

Set pool recordsize to 1M, atime=off, sync=always (with SLOG)

Set zvol block size (volblocksize) to 64K

Set the iSCSI extent logical block size to 512 (this is the only setting I could get to work in ESXi 6.7, even with "Disable physical block size reporting" checked)
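The ZFS side of these settings, as commands. This sketch only prints them; the pool name, zvol name, and size are placeholders and `iscsi_zfs_plan` is hypothetical. Two details worth knowing: `recordsize` applies to file systems rather than zvols, and `volblocksize` can only be set when the zvol is created.

```sh
#!/bin/sh
# iscsi_zfs_plan: print (do not run) the zfs commands for the settings above.
# Arguments: pool, zvol name, zvol size. The 512-byte logical block size is
# set on the iSCSI extent in the FreeNAS UI, not with zfs.
iscsi_zfs_plan() {
    pool="$1"; zvol="$2"; size="$3"
    echo "zfs set recordsize=1M $pool"
    echo "zfs set atime=off $pool"
    echo "zfs set sync=always $pool"
    echo "zfs create -V $size -o volblocksize=64K -o sync=always $pool/$zvol"
}

# Usage: review the output, then run the lines by hand:
#   iscsi_zfs_plan tank vmware_iscsi 2T
```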